How to Get High Quality Stems: The Complete Guide to AI Stem Splitting

Every music producer, DJ, and content creator has faced the same frustrating problem. You need that perfect acapella for a remix, a drumless track for practice, or an isolated guitar riff for sampling. But traditional stem splitting leaves you with muddy vocals, ghostly drum artifacts, and disappointing results.

What if you could extract any instrument from any song with improved clarity? Modern AI stem splitting services have made this possible. By combining Meta's SAM Audio with the established capabilities of Demucs, you can now isolate vocals, drums, bass, or custom instruments with higher fidelity than previous methods allowed.

In this guide, you'll learn exactly what stems are, why most stem splitters introduce artifacts, and how to achieve high quality stems that work in real production scenarios. Whether you're extracting better SUNO stems or creating clean instrument tracks for remixes, this is your technical roadmap to improved audio separation.

What Are Stems? Understanding Audio Separation Basics

Before diving into advanced techniques, let's answer the fundamental question: what are stems?

Stems are individual audio tracks extracted from a mixed song. Think of them as the building blocks of a recording. Instead of one stereo file containing everything, you get separate files for vocals, drums, bass, piano, and other instruments. Each stem contains only that specific element.

Note that there are dry stems (without any audio effects) and wet stems (with audio effects applied, such as reverb, compression, and so on). Dry stems are like ingredients before being cooked, while wet stems are the dishes in your meal. In this article, the models we mention can only extract wet stems, i.e. with effects applied.

Why Stems Matter for Modern Music Production

Stems transform a static stereo mix into flexible parts. When you have high quality stems, you can:

- Create karaoke tracks by removing vocals

- Produce mashups with clean acapella isolation

- Rebalance mixes by adjusting individual instrument levels

- Learn songs by soloing specific parts

- Sample elements without bleed from other instruments

- Fix problematic frequencies in isolated tracks

Traditional stem splitting relied on frequency masking and phase cancellation, which created artifacts and never achieved true isolation. AI changed this by learning to recognize timbre and harmonic structure rather than just filtering frequencies.
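To make the limits of phase cancellation concrete, here is a minimal sketch of the classic "center-channel removal" trick in plain Python. The toy signals, their names, and their values are made up for illustration; real audio would be read from a file.

```python
import math

def remove_center(left, right):
    """Classic phase-cancellation trick: subtract one channel from the other.

    Anything mixed identically into both channels (often the lead vocal)
    cancels out. Anything panned off-center survives, but only as mono.
    """
    return [l - r for l, r in zip(left, right)]

# Toy signals: a "vocal" panned dead center and a "guitar" panned hard left.
n = 8
vocal = [math.sin(2 * math.pi * 0.10 * i) for i in range(n)]
guitar = [0.5 * math.sin(2 * math.pi * 0.25 * i) for i in range(n)]

left = [v + g for v, g in zip(vocal, guitar)]
right = list(vocal)  # the guitar is absent from the right channel

# The vocal cancels, leaving (approximately) only the guitar.
karaoke = remove_center(left, right)
```

The vocal disappears only because it is identical in both channels. A vocal with stereo reverb leaves a residue, and a centered bass or kick drum is removed along with it, which is why this approach never achieved true isolation.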

The Problem with Most Stem Splitting Services

Not all AI stem splitters deliver equal results. Many services promise "high quality" but compromise on technical fundamentals:

Playgrounds vs. Tools

Meta's SAM Audio playground, while technically advanced, can only process 30-second clips in mono. This works for demos but fails when you need full song processing in stereo.

Single Model Limitations

Many services use just one AI model, forcing trade-offs. Demucs excels at preset separations (vocals, drums, bass, other) but can't extract custom instruments like saxophone or synthesizer. Other models might offer custom extraction but lack the separation quality of specialized tools.

No Audio Restoration Pipeline

Stem extraction from compressed sources like MP3 or streaming platforms reveals existing artifacts. MP3s cut frequencies above 16 kHz, add quantization noise, and reduce dynamic range. A stem splitter that doesn't address these issues simply isolates damaged audio more clearly.

How SAM Audio Advances Stem Splitting

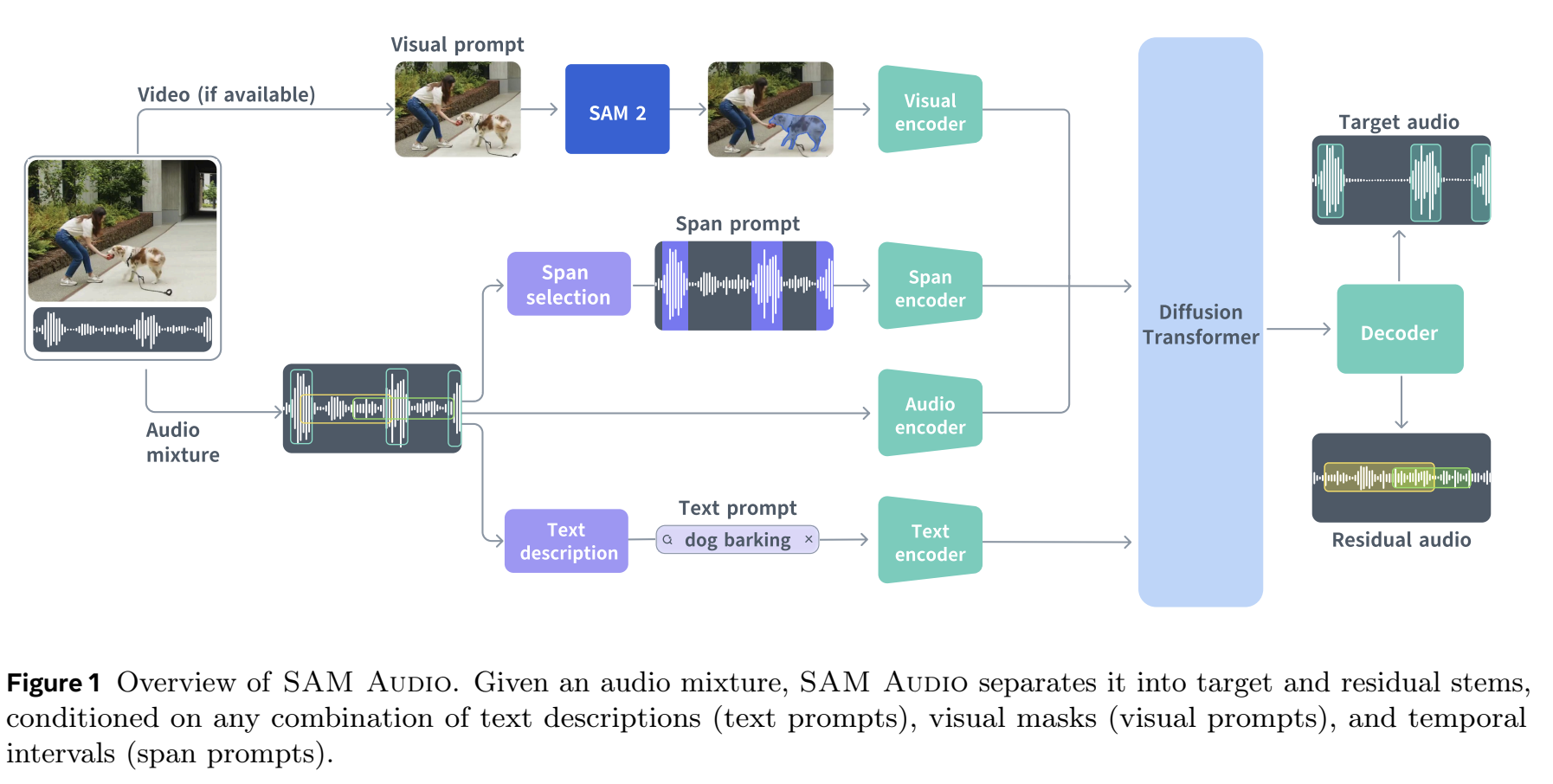

Meta's SAM Audio represents a technical leap in audio separation. As the first unified multimodal model for audio, it accepts several kinds of prompts: text, images, and spans (timestamps inside the audio). This gives it strong performance across many tasks, including stem separation.

See the Difference: SAM Audio vs. Industry Standards

Below is a real-world comparison. We took a complex classical mix and attempted to isolate the Piano stem using SAM Audio (Neural Analog) versus a leading competitor.

Example: Piano Extraction Comparison

Isolating a single instrument from a dense stereo mix using the prompt 'piano'.

The Technology Behind SAM Audio

SAM Audio uses a flow-matching diffusion transformer architecture trained on millions of audio mixtures. The model learns what compression destroys and what natural music should sound like, enabling reconstruction of missing harmonic content.

The system operates at RTF ≈ 0.7, processing audio faster than real-time. A 4-minute song extracts in under 3 minutes, even for complex custom instrument requests.
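The real-time factor (RTF) is processing time divided by audio duration, so the estimate above is a single multiplication. A small sketch, where the function name is ours and 0.7 is the figure quoted above:

```python
def processing_seconds(audio_seconds, rtf):
    """Estimated processing time, given RTF = processing time / audio duration."""
    return audio_seconds * rtf

song_seconds = 4 * 60  # a 4-minute song
estimate = processing_seconds(song_seconds, rtf=0.7)

print(f"{estimate:.0f} s (about {estimate / 60:.1f} min)")
# prints: 168 s (about 2.8 min)
```

Any RTF below 1.0 means faster than real time. Actual times will vary with hardware, queue load, and the complexity of the request.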

The Neural Audio Encoder: Why Model Architecture Determines Quality

At the heart of every AI stem splitter lies a neural audio encoder. This component transforms raw audio waveforms into compressed representations that capture timbre, pitch, rhythm, and spatial characteristics. Think of it as the model's "ears": whether the model can distinguish a snare drum from a vocal depends heavily on how well this encoder was trained.

Early separation models used simple CNN encoders that processed spectrograms like 2D images. While effective for basic tasks, these encoders struggled with fine timbral details and temporal consistency. Newer models like Hybrid Demucs combine a time-domain (waveform) branch with a spectrogram branch, which helps preserve the phase relationships critical for natural sound.

SAM Audio represents a leap forward with its Perception Encoder Audiovisual (PE-AV), trained on over 100 million video clips. This encoder understands the relationship between visual and auditory information. When you prompt it to extract "guitar", PE-AV draws on its knowledge of what guitars look like being played, how they're mic'd in studios, and how their harmonic structure evolves. This multimodal understanding enables more precise separation, especially for instruments that share frequency ranges with other sources.

The encoder's quality directly impacts stem quality in three ways:

- Timbre resolution: Better encoders distinguish harmonics from noise, preserving instrument character

- Temporal coherence: Advanced encoders maintain consistent separation across time, avoiding the "chattering" artifacts common in cheaper tools

- Feature richness: Large encoders capture subtle details like string squeaks, breath sounds, and room ambience

Each new model iteration innovates primarily by improving this encoder architecture. When evaluating stem splitting services, the encoder architecture matters more than marketing claims. A model using a 2020-era encoder cannot match the quality of one built on PE-AV or similar modern architectures, regardless of post-processing tricks.

How Neural Analog Builds on SAM Audio and Demucs

Neural Analog leverages SAM Audio to create a practical pipeline for musicians.

Unlike the official SAM Audio playground, which is limited to 30-second mono clips, Neural Analog processes complete tracks in full stereo. Stems maintain the spatial width and depth essential for modern production.

Neural Analog lets you choose between SAM Audio and the older, battle-tested Demucs:

- SAM Audio for flexible custom instrument extraction (saxophone, synthesizer, strings)

- Demucs for faster, reliable preset separations (vocals, drums, bass, other)

While you can't select spans in your audio on Neural Analog (yet), you can prompt the model freely. Join our Discord to explore the future of stem splitting together!

Integrated Audio Restoration Pipeline

You can run extracted stems through our upscaling architecture to extend the frequency range and touch up some of the artifacts.

Better SUNO Stems: Why AI-Generated Music Needs Special Treatment

Suno and other AI music generators (like Udio or Producer.ai) create impressive compositions but output low bitrate MP3s (typically 128-192 kbps). Their training data includes compressed audio, so the models learn to replicate MP3 artifacts. This creates a quality ceiling.

The Double Compression Problem

Extracting stems from Suno MP3s using basic tools separates already-compromised audio. The result has:

- Severe high-frequency loss (often nothing above 15 kHz)

- Quantization noise embedded in the signal

- Reduced stereo width and depth

- Digital "ringing" artifacts

How Restoration Improves AI-Generated Music

The process specifically addresses AI music limitations:

- Initial Extraction: SAM Audio or Demucs separates stems

- Artifact Removal: Neural restoration identifies and removes MP3 compression noise

- Frequency Reconstruction: AI predicts and regenerates harmonic content up to 20 kHz

- Stereo Enhancement: Width and depth are restored based on patterns from professional recordings

The result is SUNO stems that more closely resemble professionally recorded multitracks.

Step-by-Step: How to Get High Quality Stems using Neural Analog

Here's the workflow for consistent results:

Step 1: Upload Audio

Drag and drop your file or paste a link from Suno, Udio, Producer AI, or direct audio URLs. Supported formats include MP3, WAV, FLAC, M4A, and OGG up to 10 minutes.

Step 2: Choose Separation Strategy

For predictable results: Use presets (Vocals, Drums, Bass, Other). These use Demucs and deliver consistent separation.

For custom instruments: Select "Custom Instrument" and type your description. Be specific: "electric guitar solo with distortion" beats "guitar".

Step 3: Configure Processing

Stereo Processing: The default maintains full stereo imaging by processing the Mid and Side channels independently. Switch to Mono or to Left/Right processing depending on the instrument.
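For readers unfamiliar with mid/side processing, here is a minimal sketch of the standard mid/side encoding and its inverse. This illustrates the general convention only. How Neural Analog implements it internally is not described here, and the function names are ours.

```python
def to_mid_side(left, right):
    """Encode left/right into mid (what the channels share) and side (their difference)."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side

def to_left_right(mid, side):
    """Decode mid/side back to left/right; the exact inverse of to_mid_side."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right

left = [0.5, 0.25, -0.75, 1.0]
right = [0.5, -0.25, 0.25, 0.0]

mid, side = to_mid_side(left, right)
restored_left, restored_right = to_left_right(mid, side)
```

Because the transform is lossless, a separator can work on mid and side separately and still decode back to a normal stereo stem. Centered sources live mostly in the mid channel, while wide elements such as reverb tails and doubled guitars show up in the side channel.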

Step 4: Process and Download

Click "Extract Stems" and wait for processing. Most tracks complete in less than 2 minutes. You'll receive individual files for each stem with spectrogram analysis and quality scoring.

Step 5: Restore

Like what you hear? Simply click on "Restore audio" to upscale the audio to a high quality 20 kHz WAV.

Technical Considerations for Production Workflows

When integrating stem extraction into your production pipeline, consider these factors:

Source File Quality

While restoration can rebuild compressed files, starting with lossless sources (WAV, FLAC) provides more accurate separation. The encoder has more original information to work with, reducing the amount of reconstruction needed.

If your instrument only appears for a few seconds in a long audio file, we recommend trimming the file to the relevant part before uploading. This makes the task easier for the model and speeds up processing.
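Any audio editor can do this trim. For uncompressed PCM WAV files it can also be scripted with Python's standard `wave` module, as in the sketch below. The function name and the file names in the usage comment are placeholders, and compressed formats such as MP3 would need a different tool.

```python
import wave

def trim_wav(src_path, dst_path, start_s, end_s):
    """Copy only the [start_s, end_s) region of a PCM WAV file.

    Returns the number of frames written.
    """
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        rate = src.getframerate()
        total = src.getnframes()
        # Clamp the requested region to the actual file length.
        start_frame = min(total, max(0, int(start_s * rate)))
        end_frame = min(total, max(start_frame, int(end_s * rate)))
        src.setpos(start_frame)
        frames = src.readframes(end_frame - start_frame)

    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)    # same channel count, sample width, and rate
        dst.writeframes(frames)  # the header's frame count is updated on write

    return end_frame - start_frame

# Example (placeholder file names): keep the solo between 1:05 and 1:20.
# trim_wav("full_song.wav", "sax_solo.wav", 65.0, 80.0)
```

Leaving a second or two of context on either side of the part you want is a reasonable precaution, since you can always tighten the edit later.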

Stem Phase Coherence

Multiple stems from the same song should sum to the original mix (or close to it). Neural Analog's models maintain phase relationships, ensuring that recombining stems doesn't introduce comb filtering or cancellation. This is critical for stem mastering and remixing.
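One way to check this yourself is to sum the stems, subtract the result from the original mix, and measure how loud the leftover is. The sketch below does that on plain lists of samples. The function name and toy values are illustrative, and it assumes the stems are sample-aligned with the mix and share its sample rate.

```python
import math

def residual_db(mix, stems):
    """Level of (mix - sum of stems) relative to the mix, in dB.

    More negative is better; -inf means the stems sum exactly to the mix.
    """
    summed = [sum(samples) for samples in zip(*stems)]
    residual = [m - s for m, s in zip(mix, summed)]
    mix_energy = sum(m * m for m in mix)
    residual_energy = sum(r * r for r in residual)
    if mix_energy == 0:
        raise ValueError("mix is silent")
    if residual_energy == 0:
        return float("-inf")
    return 10 * math.log10(residual_energy / mix_energy)

# Toy example: two stems that sum exactly to the mix.
vocals = [0.5, -0.25, 0.125, 0.0]
drums = [0.25, 0.25, -0.5, 0.5]
mix = [v + d for v, d in zip(vocals, drums)]

perfect = residual_db(mix, [vocals, drums])                   # exact reconstruction
leaky = residual_db(mix, [vocals, [d * 0.5 for d in drums]])  # half the drums missing
```

In a DAW, the equivalent test is to invert the polarity of the original mix and play it alongside the summed stems: the quieter the result, the better the stems recombine.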

Artifact Audibility

Listen for common separation artifacts:

- Bleed: Instruments appearing faintly in the wrong stem

- Chattering: Rapid switching on/off during extraction

- Smearing: Loss of transient sharpness

- High-frequency loss: Lack of air and detail

The restoration pipeline addresses many of these, but starting with clean sources minimizes them at the extraction stage. We also recommend using a DAW afterwards to keep only the parts of the stem you like.

When to Use Each Model Type

Use Demucs (Presets) When:

- You need standard vocal, drum, or bass isolation

- Processing speed is critical

- The source mix is relatively clean

- You want predictable, tested results

Use SAM Audio (Custom) When:

- The instrument isn't covered by standard presets

- The mix is dense and overlapping

- You need to separate similar sounds (e.g., lead guitar vs. rhythm guitar)

- The source contains unusual instruments or synthesized sounds

Always Use Restoration When:

- Source is MP3 or streaming audio

- The original file is below 320 kbps

- You're extracting from AI-generated music

- The stems will be used in commercial releases
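The checklists above can be condensed into a small decision helper. This is only a sketch of the rules of thumb in this section, not a Neural Analog API: the function names, parameters, and return values are hypothetical, and the subjective criteria (how dense the mix is, how similar the sounds are) are left to your ears.

```python
PRESET_STEMS = {"vocals", "drums", "bass", "other"}

def pick_model(target):
    """Standard presets go to Demucs; anything else needs a custom prompt."""
    if target.strip().lower() in PRESET_STEMS:
        return "demucs"
    return "sam_audio"

def should_restore(mp3_or_streaming=False, bitrate_kbps=None,
                   ai_generated=False, commercial_release=False):
    """True if any of the 'always use restoration' conditions applies."""
    low_bitrate = bitrate_kbps is not None and bitrate_kbps < 320
    return mp3_or_streaming or low_bitrate or ai_generated or commercial_release

# A saxophone from a 192 kbps AI-generated track:
model = pick_model("saxophone")
restore = should_restore(mp3_or_streaming=True, bitrate_kbps=192, ai_generated=True)
```

Here `model` is `"sam_audio"` and `restore` is `True`. A vocal pulled from a lossless file for private practice would instead give `"demucs"` with no restoration needed.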

Getting Started

Start with a test track to familiarize yourself with the workflow. Compare extracted stems against your original mix to verify phase coherence and artifact levels. Once comfortable, integrate stem extraction into your regular production process for remixes, samples, and mix analysis.

With modern AI models and proper restoration, extracted stems can approach the quality of original multitrack sessions, opening new creative possibilities for producers, DJs, and musicians working with any source material.